San Francisco: Aiming to empower businesses with deep learning capabilities, Intel on Tuesday launched the second generation of Xeon Scalable processors, including the flagship Xeon Platinum 9200 processor with 56 cores, at its “Data-Centric Innovation Day” here.

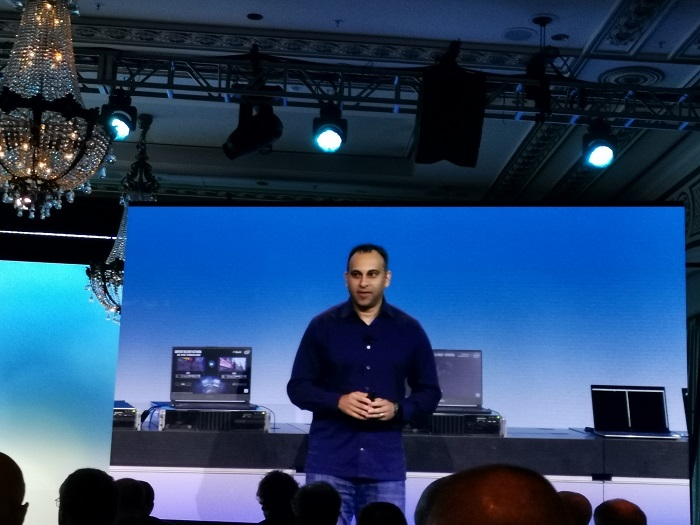

“This is a big day for us. It is the first truly data-centric launch in our history,” Navin Shenoy, Executive Vice President, Intel, and General Manager, Data Center Group, told reporters here, adding, “this thing is a beast.”

The new Intel Xeon Scalable processors with built-in Deep Learning Boost (DLB) technology add AI capabilities for the next era of data-driven Internet of Things (IoT) edge platforms. The technology also speeds up deep learning inference workloads such as object detection, speech recognition and image classification by up to 14 times.

“Half the world’s data was created in the last two years and only two per cent of it has been analysed. That leads us to great optimisation,” added Shenoy.

The flagship Xeon Scalable Platinum 9200 processor has been designed for high-performance computing (HPC), advanced analytics, artificial intelligence (AI) and high-density infrastructures.

It has 12 memory channels that aim to deliver breakthrough levels of performance with the highest Intel architecture FLOPS per rack along with the highest DDR4 native memory bandwidth support of any Intel Xeon processor platform.

“Intel Speed Select” technology gives enterprise and infrastructure-as-a-service (IaaS) providers more flexibility to address evolving workload needs. The new Xeon Scalable processors can be configured to different performance settings of core counts and frequencies, effectively creating “three CPUs in one”.

“The portfolio responds to the growing demand for compute but also the diversifying customer needs that are emerging as computing expands. We’ve seen an explosion in the types of workloads customers are running,” noted Shenoy.

Other Xeon processors include the Intel Xeon Platinum Processor (8200 Series), which offers business agility, hardware-enhanced security and built-in AI. It has up to 28 cores, supports 2, 4 and 8+ socket configurations, and offers top memory bandwidth.

He also said that Intel DL Boost, a set of special deep learning instructions in the Intel Xeon Platinum 8200 processor, can raise AI processing performance by up to 14 times.

“The Intel DL Boost, or special deep learning instructions in the Xeon Platinum 8200 processor can elevate the AI processing performance by up to 14 times,” said Bob Swan, CEO, Intel.

According to Patrick Moorhead, Analyst, Moor Insights & Strategy, the most interesting thing about the new Xeons is the addition of machine learning capabilities (DL Boost) built into the chip, which, when latency counts, is good for specific inference workloads like “recommendation engines”.

“Not too many know that CPUs already dominate ML inference usage and this just gave datacenters another reason to continue doing this for certain workloads. This isn’t Intel’s big discrete AI accelerator play as those are slated to become real in 2020,” Moorhead told IANS.

The chip-making giant also announced the Intel Xeon Gold Processor (6200 Series), with Networking Specialised (NFVi-optimised) SKUs with Intel Speed Select Technology, as well as the Intel Xeon Gold Processor (5200 Series), the Intel Xeon Silver Processor (4200 Series) and the Intel Xeon Bronze Processor (3200 Series).